Retrieval-Augmented Generation (RAG) and Large Language Models for conservation

The urgency to conserve biodiversity

Biodiversity is crucial for maintaining ecosystem stability, supporting food security, and preserving natural heritage. However, human activities, such as deforestation, pollution, and climate change, are driving species to extinction at an alarming rate. The loss of biodiversity not only threatens the survival of countless species but also undermines the resilience of ecosystems to environmental changes. Immediate action is needed to conserve biodiversity and ensure the health and sustainability of our planet.

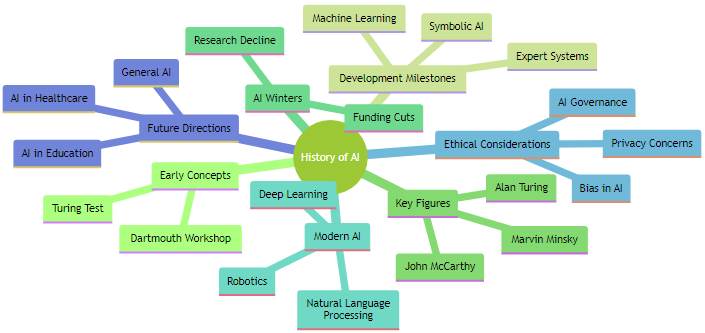

The AI revolution

The generative AI revolution has brought remarkable advances that are transforming industries and daily life. As of 2023, generative AI models like GPT-4 are trained on very large text datasets (the exact size of GPT-4's training data has not been published), which underpins their capacity to understand and generate human-like text. These models power applications in content creation, from automated news articles and marketing copy to personalized customer interactions. AI technologies as a whole could add an estimated $15.7 trillion to global GDP by 2030, according to a widely cited PwC projection. The adoption of AI in healthcare is also accelerating, with AI-driven diagnostic tools reaching accuracy comparable to human experts on some tasks, which may improve patient outcomes and reduce costs. In creative industries, AI-generated art, music, and literature are gaining traction, sparking debates about authorship and originality. The scale and impact of this revolution underline the need for robust governance and ethical safeguards to harness its potential while mitigating risks.

LLM hallucination

Large Language Models (LLMs) like GPT-4 have shown remarkable capabilities in understanding and generating human-like text. However, LLMs can sometimes produce outputs that are fluent but factually incorrect or misleading, a phenomenon known as "hallucination." This is particularly concerning when AI systems are used in critical areas such as healthcare, law, and information dissemination. The same holds for conservation, where decisions depend on the accuracy and reliability of AI-generated information. Mitigating hallucinations involves using robust training datasets, implementing validation mechanisms, and integrating human expertise to review and correct AI outputs.
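One simple validation mechanism of the kind mentioned above can be sketched in Python. This is only an illustration under stated assumptions: it assumes the model has been asked to cite sources as bracketed ids such as `[doc-2]`, and the function name `check_citations` and the id format are hypothetical. It checks only that cited ids exist among the documents supplied to the model; it does not verify that the cited text actually supports the claim, so human review remains necessary.

```python
import re


def check_citations(answer, retrieved_ids):
    """Compare the source ids cited in an answer with the ids that were supplied.

    Assumption for this sketch: citations appear as bracketed ids, e.g. [doc-2].
    """
    cited = re.findall(r"\[([\w-]+)\]", answer)
    allowed = set(retrieved_ids)
    unknown = sorted({c for c in cited if c not in allowed})
    return {
        # Cited ids that match a supplied document.
        "cited": sorted(set(cited) & allowed),
        # Cited ids that match nothing: a possible hallucinated reference.
        "unknown": unknown,
        # Route to a human reviewer if a citation is unknown or none is given.
        "needs_review": bool(unknown) or not cited,
    }
```

An answer that cites an id outside the supplied set, or cites nothing at all, is flagged for an expert to review rather than being published automatically.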

Causes of AI Hallucination

Inadequate Training Data: AI models rely on large datasets for training. If these datasets are incomplete, biased, or contain errors, the AI may generate incorrect information.

Complex Interactions: AI models contain many interacting components, and their combined behavior is difficult to predict. Unexpected interactions between these components can result in hallucinations.

Contextual Misunderstanding: AI may lack the ability to fully understand the context of a query, leading to responses that are technically plausible but contextually irrelevant or incorrect.

RAG and LLM

Retrieval-Augmented Generation (RAG) combines the strengths of LLMs and information retrieval systems, and is a promising approach to reducing hallucinations. Instead of relying solely on the model's internal parameters, a RAG system first retrieves relevant documents or data from a large corpus, then passes them to the model as context for generating its response. Grounding the output in retrieved material makes it possible to cite sources and check claims against them, which reduces, though does not eliminate, the likelihood of incorrect or nonsensical information.

The result is more reliable and contextually accurate output, which is crucial for applications requiring high levels of precision and trustworthiness. In conservation, RAG can help ensure that AI-generated insights are based on the latest and most relevant scientific data.
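The retrieve-then-generate flow described above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the corpus entries are placeholder text invented for the example, the names `CORPUS`, `retrieve`, and `build_prompt` are hypothetical, and a simple word-overlap cosine score stands in for the embedding-based search a real system would more likely use. The sketch stops at building the grounded prompt; sending it to an LLM is left out.

```python
import math
import re
from collections import Counter

# Placeholder passages invented for this sketch; not real survey data.
CORPUS = {
    "doc-1": "Placeholder field report: otter tracks recorded along the river bank.",
    "doc-2": "Placeholder survey note: wetland drainage reduces amphibian breeding habitat.",
    "doc-3": "Placeholder memo: budget planning for the next office renovation.",
}

# Tiny stopword list so common words do not create spurious matches.
STOPWORDS = {"a", "an", "the", "in", "of", "for", "is", "what", "and", "to"}


def tokenize(text):
    """Lowercase the text and keep alphabetic words that are not stopwords."""
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS]


def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in set(a) & set(b))
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query, corpus, k=2):
    """Return ids of up to k documents with a non-zero similarity to the query."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(text))), doc_id)
              for doc_id, text in corpus.items()]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for score, doc_id in scored[:k] if score > 0]


def build_prompt(query, corpus, k=2):
    """Assemble a prompt that asks the model to answer only from retrieved sources."""
    ids = retrieve(query, corpus, k)
    context = "\n".join(f"[{doc_id}] {corpus[doc_id]}" for doc_id in ids)
    return (
        "Answer using only the sources below and cite their ids.\n"
        f"Sources:\n{context}\n"
        f"Question: {query}"
    )
```

Calling `build_prompt("What threatens amphibian habitat in the wetland?", CORPUS)` yields a prompt that contains only the wetland passage, tagged with its id so the generated answer can cite it. Keeping the ids in the prompt is the design choice that makes the output traceable to its sources.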

Use cases

Report Generation

AI can automate the generation of comprehensive conservation reports. By analyzing scientific literature, environmental data, and field reports, RAG-powered systems can produce detailed documents that summarize current biodiversity status, threats, and conservation actions. These reports can be tailored for policymakers, researchers, and the general public, making complex information accessible and actionable.

Biodiversity Atlases

Biodiversity atlases are critical tools for mapping species distributions and understanding ecological patterns. AI can enhance these atlases by processing large datasets from various sources, including satellite imagery, field observations, and citizen science projects. RAG systems can help keep atlases current as new data arrives, providing accurate and up-to-date information on species distributions and habitat changes.

Impact Assessment

Environmental impact assessments (EIAs) are essential for evaluating the potential effects of development projects on biodiversity. AI can streamline the EIA process by analyzing historical data, modeling ecological impacts, and generating detailed assessment reports. RAG-powered systems can ensure that assessments are based on the most relevant and recent data, improving the accuracy and reliability of EIA outcomes.

AI governance

The need for AI governance is paramount as artificial intelligence increasingly permeates various aspects of society and industry. Without robust governance frameworks, the rapid advancement of AI technologies can lead to unintended consequences, such as biased decision-making, invasion of privacy, and lack of accountability. Effective AI governance ensures that AI systems are developed and deployed ethically, transparently, and responsibly. It addresses critical issues like data protection, algorithmic fairness, and the societal impacts of automation. Moreover, governance frameworks help build public trust in AI by establishing clear standards and regulations that safeguard individual rights and promote equitable outcomes. In a world where AI decisions can significantly influence economic opportunities, healthcare, law enforcement, and more, establishing comprehensive AI governance is essential to prevent harm, promote social good, and ensure that the benefits of AI are distributed fairly across all segments of society.

At Natural Solutions, we are at the forefront of leveraging Large Language Models (LLMs) to drive innovative conservation efforts. If you're looking to enhance your conservation projects with cutting-edge AI technology, we invite you to partner with us. Our expertise in integrating LLMs can provide you with unparalleled insights, efficient data analysis, and powerful tools for sustainable environmental management. Don’t miss the opportunity to transform your conservation strategies with AI. Contact us today to learn more about how we can help you achieve your conservation goals. Together, we can make a significant impact on preserving our planet's biodiversity.